About Me

Research

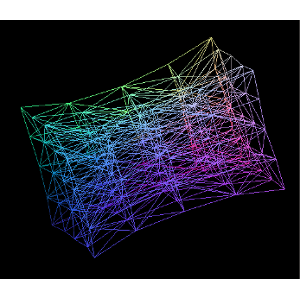

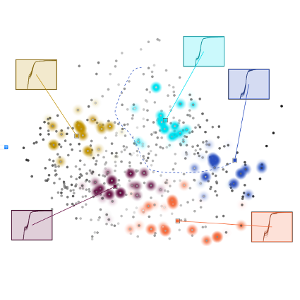

My research combines interaction techniques from the scientific visualization and visual analytics disciplines to study high-dimensional parameter spaces. I ask the question:

"How can we add intuition to complex high-dimensional systems using interactive visualization?"

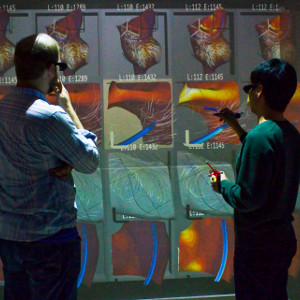

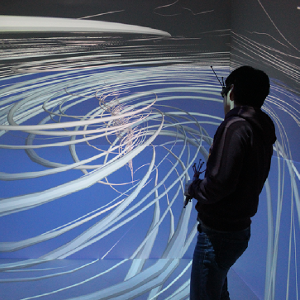

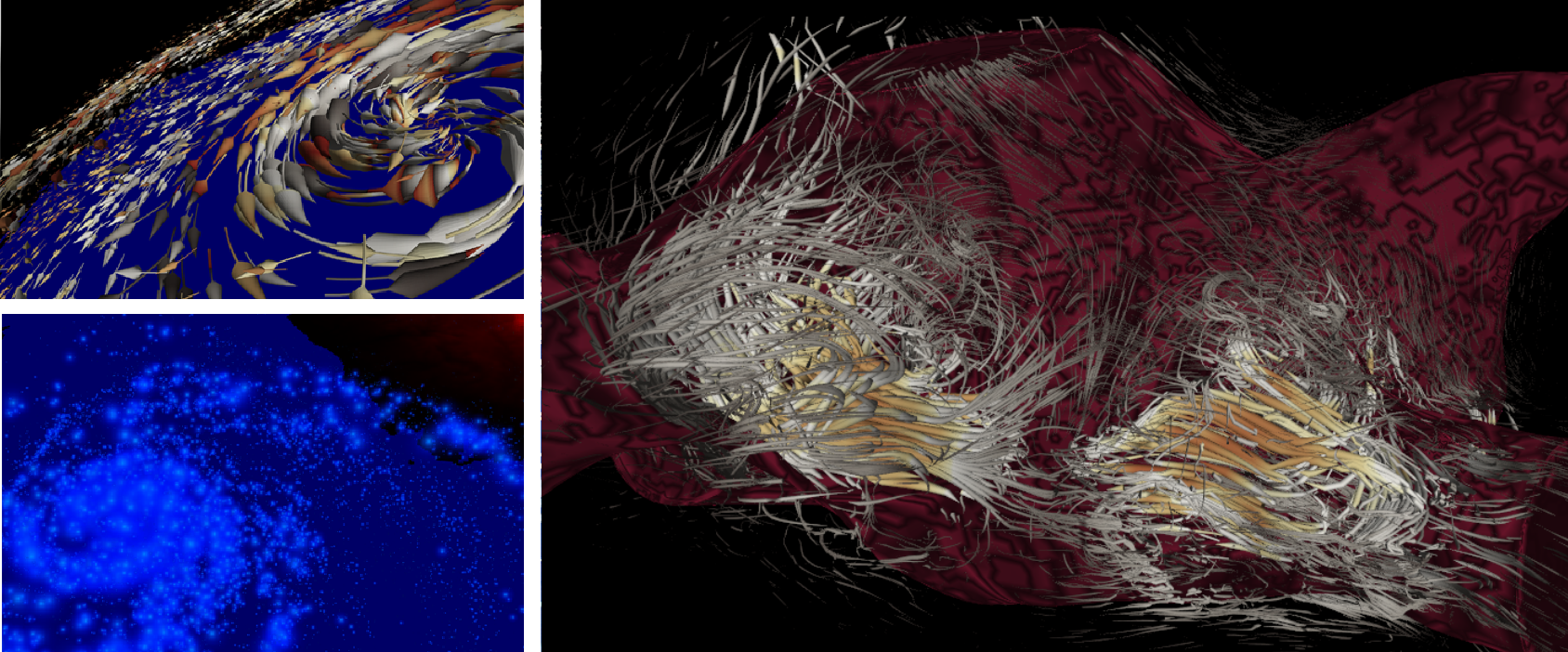

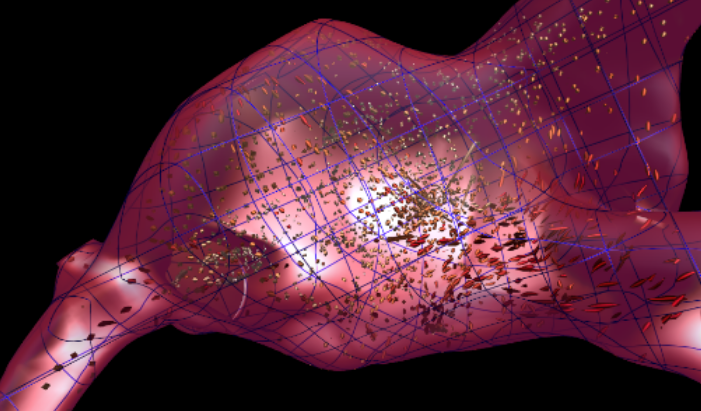

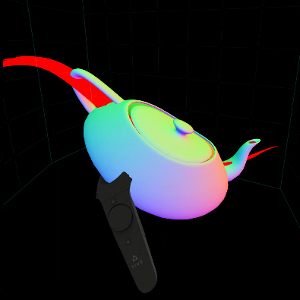

With the emergence of Virtual Reality (VR) and Augmented Reality (AR), perhaps soon it will be common practice for scientists and engineers to immerse themselves in their data, directly manipulating variables of interest. Imagine painting stress on a medical device, twisting a pathline to increase vorticity in a fluid, and stretching a solid object to change displacement. These organic, user-defined actions allow subject matter experts to ask questions of ill-defined systems in the same way we naturally experience reality.

To solve these types of problems, I explore ensemble visualization, parameter space analysis, semantic interaction, uncertainty, immersive environments, remote visualization, and high performance computing.

Please review my projects for more information on how these research areas fit together.

Research Interests


Professional and Teaching Experience

I have more than 20 years of professional and teaching experience. This diverse background has fostered strong verbal, listening, and writing skills, complemented by a desire to teach others.

My professional life began at Dell Technologies, where I worked as a website programmer for software and peripherals, and for search. After four years, I moved to Accenture Technology Labs to pursue research on distributed systems. I then worked as a software contractor, a website designer, and finally a graphics programmer before becoming a PhD student.

Throughout my professional career, occasionally between jobs, I have also pursued teaching. I started as a mathematics tutor while an undergraduate at Oklahoma State University. Later, I was trained through an alternative certification program to become a high school teacher in the state of Texas. It was in Minnesota, however, where I taught my first high school technology class. Naturally, as a PhD student I have had the opportunity to serve as a teaching assistant for several key graduate and undergraduate-level classes.

For more information, please see my professional and teaching experience.

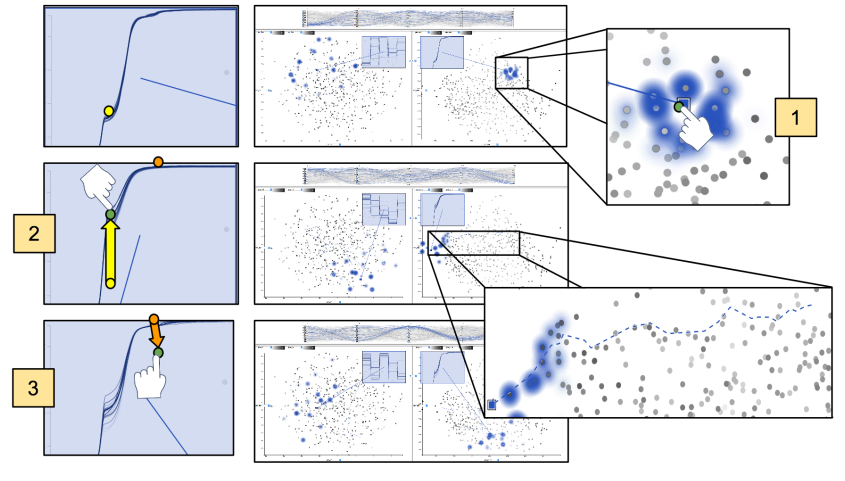

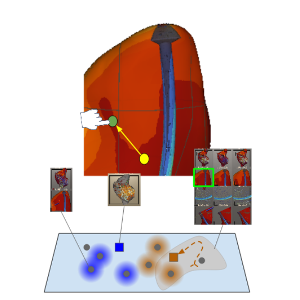

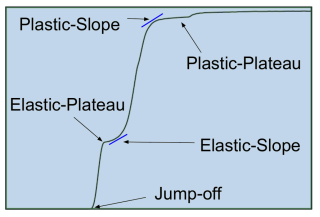

For example, in the shock physics application, there is a feature called the elastic plateau. A user can simply drag the elastic plateau and see how the other input and output values change. The application also visualizes uncertainty, so the user can judge whether the prediction should be trusted in the context of the ensemble.
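The page does not describe how dragging an output feature is mapped back to input values. Purely as a hypothetical illustration, the sketch below assumes one common approach: kernel-weighted (Nadaraya-Watson) regression from a scalar output feature to the input parameters across the ensemble runs. The names `Run`, `drag_feature`, and `bandwidth` are invented for this example, and the weighted standard deviation and effective sample size stand in, as assumptions, for the kind of uncertainty a user might inspect.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Run:
    """One hypothetical ensemble member."""
    inputs: list[float]  # hypothetical simulation input parameters
    plateau: float       # hypothetical scalar output feature (e.g. plateau height)


def drag_feature(runs: list[Run], target: float, bandwidth: float):
    """Estimate inputs for a dragged output value (hypothetical sketch).

    Each run is weighted by a Gaussian kernel on the distance between its
    output feature and the dragged target. Returns the weighted mean of the
    inputs, the per-input weighted standard deviation, and the effective
    sample size (near 1 means the estimate leans on a single run).
    """
    weights = [math.exp(-0.5 * ((r.plateau - target) / bandwidth) ** 2) for r in runs]
    total = sum(weights)
    if total == 0.0:
        # Every kernel weight underflowed: the target lies far outside the ensemble.
        raise ValueError("target is too far from every ensemble member")
    weights = [w / total for w in weights]

    dims = len(runs[0].inputs)
    mean = [sum(w * r.inputs[d] for w, r in zip(weights, runs)) for d in range(dims)]
    std = [
        math.sqrt(sum(w * (r.inputs[d] - mean[d]) ** 2 for w, r in zip(weights, runs)))
        for d in range(dims)
    ]
    ess = 1.0 / sum(w * w for w in weights)
    return mean, std, ess


if __name__ == "__main__":
    ensemble = [Run([1.0, 10.0], 0.0), Run([2.0, 20.0], 1.0), Run([3.0, 30.0], 2.0)]
    print(drag_feature(ensemble, target=1.0, bandwidth=0.5))
```

A wide spread or a small effective sample size signals that few runs support the prediction, which is one plausible way to decide whether to trust it in the context of the ensemble.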